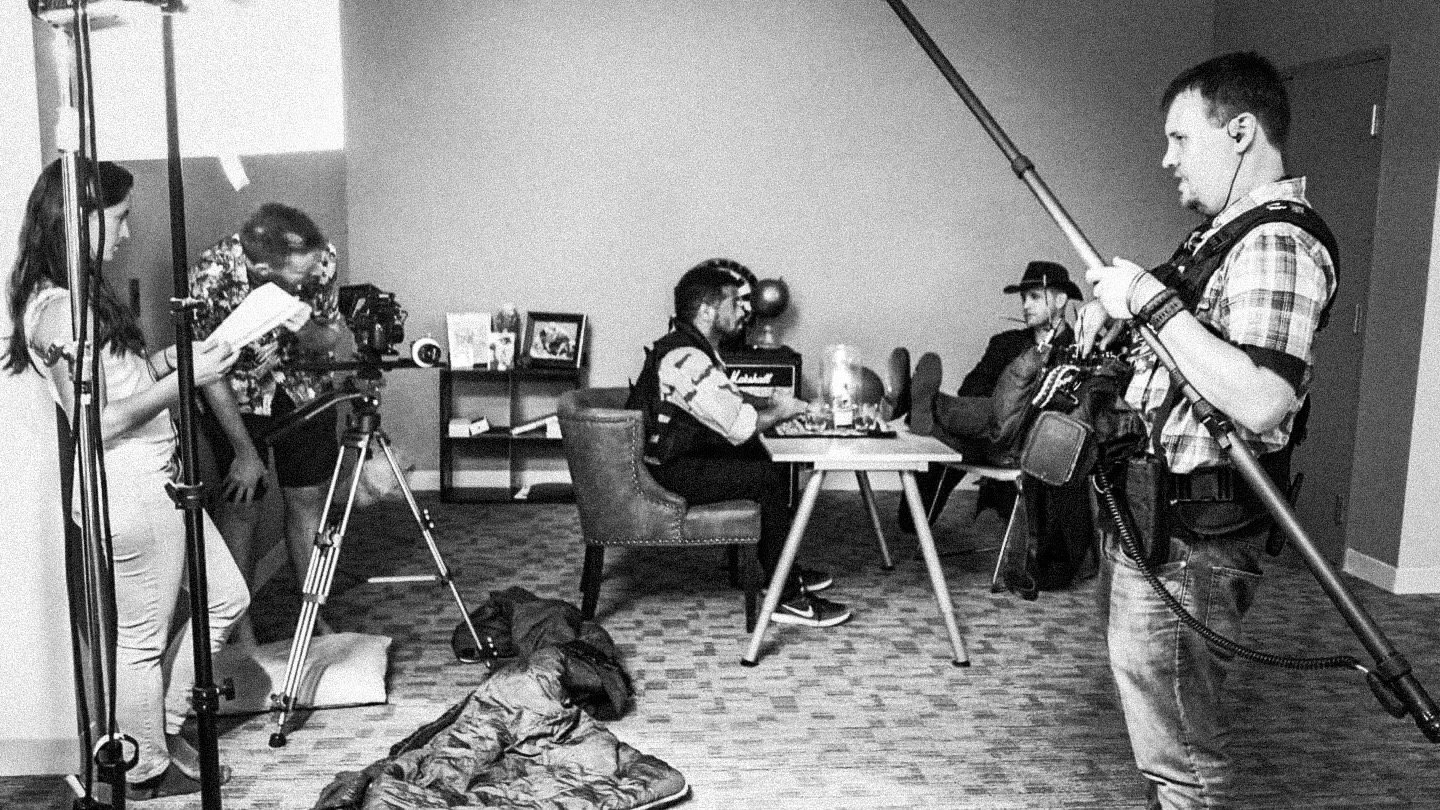

I don’t think we even had a script when we first started shooting; most of the lines and performances were improvised by the actors. And it turns out the go-go-go style of staying awake and powering through, which was my usual modus operandi for 24 Speed back then, really didn’t translate well to a full 48-hour stretch. There’s a scene where I get kicked and go down. We shot it at about hour 35, late on Saturday night, and I stayed down for a little while, just kind of exhausted.

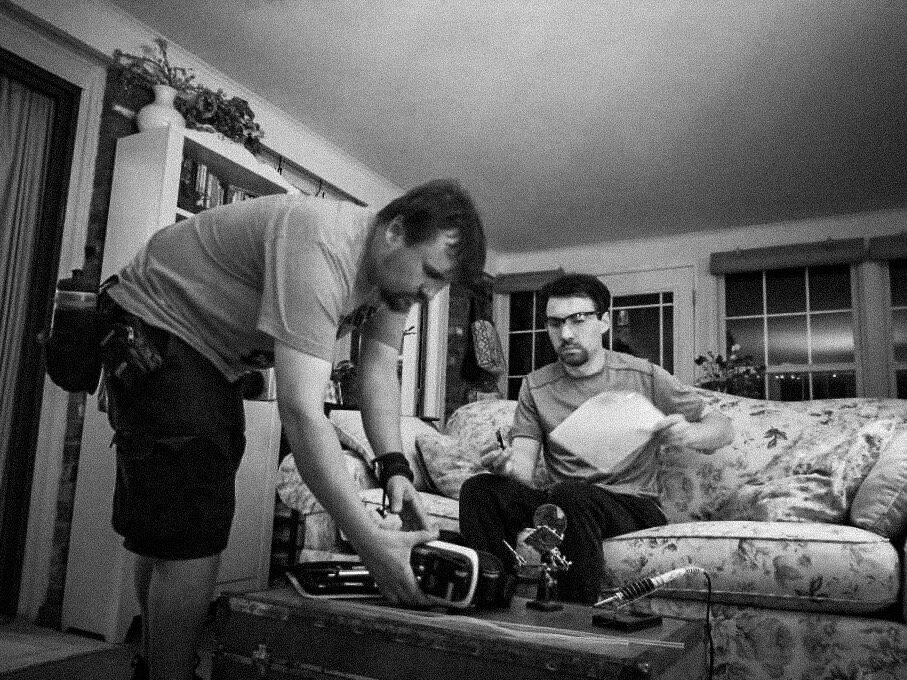

Things didn’t get any easier when we got to the edit. Our reach had definitely exceeded our grasp, and there were a couple of scenes we had planned but never got around to filming, so putting the short together felt impossible. Our Sunday deadline came and went, the film still wasn’t done, and I felt myself sinking into despair, considering not even turning it in.

But a few hours after the deadline passed, I got a call from one of the editors at the 48HFP, who asked when we might be turning it in. I told him I wasn’t sure that I should, and he replied, “Don’t you want to turn in your movie? I mean, you’ll get to see it on the big screen.” I figured I owed it to my team to finish the project, so I agreed to meet him in person and drop it off on a USB stick. When I got to his place, he was so chill and nice, congratulating me on just getting the film made at all. It was exactly the pep talk I needed at a moment when I was feeling so down, like I’d failed.

Going into the actual screening a week or so later, I was terrified we would have the worst film there, having watched a lot of awesome and hilarious winning films from prior years. But stepping into the fantastic Art Deco-style AFI Silver in Silver Spring for the first time, seeing our film on that gorgeous cinema screen, and hearing people laugh at our jokes, and even a couple of ooohs and aaahs at our martial arts sequences, changed things.

Yeah, this short film wasn’t our finest work, but a lot of the films that day were somewhat rough, and that was fine! It really felt like all of us were sharing in the joy of making something, rather than judging each other for where we missed the mark. It was an incredible, welcoming experience, and that’s something I’ve tried to carry with me as a filmmaker ever since: to take part in the joy and creativity of filmmaking, and to be similarly welcoming towards other filmmakers. Even someone relatively new to the craft may have an interesting, unexpected take on things. And I was hooked.